In 1968, the software industry was in crisis. Software projects had grown too large and too complex for existing development practices, a fundamental problem that engineers at the NATO Software Engineering Conference called the “software crisis.” Four years later, Edsger Dijkstra published his essay on Structured Programming. He described the challenges the industry was facing:

The major cause of the software crisis is that the machines have become several orders of magnitude more powerful! To put it quite bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem.

Source: The Humble Programmer by Edsger Dijkstra (1972)

Now, over half a century later, we find ourselves hurtling towards a second crisis. However this time, the crisis is driven by the invention of large language models which have given computers the capability to program themselves at a scale which will soon surpass the ability of most human programmers. As we scramble to make sense of this new era of computation, I believe we can draw inspiration from Dijkstra and others who helped shape the abstractions that we take for granted today and invent a whole new set of abstractions for programming with natural language.

To paraphrase Dijkstra, when we had a few weak language models, prompt engineering became a mild problem, and now we have gigantic language models, prompt engineering has become an equally gigantic problem. When language models had small context windows we came up with heuristics to pack as much relevant context in as possible. Now, the context windows often surpass the available context and we find ourselves with a whole new set of problems as long-running agents get overwhelmed with the details of million-token prompts and hundreds of tools at their disposal, chasing their own tails and compounding their errors.

Punch Cards and Tokens

Looking back at the state of computer programming in the 70’s, we can see some interesting similarities to how we do prompt engineering nowadays. Code was written as a series of low-level machine instructions on punch cards which were fed to the computer one-at-a-time and executed in order.

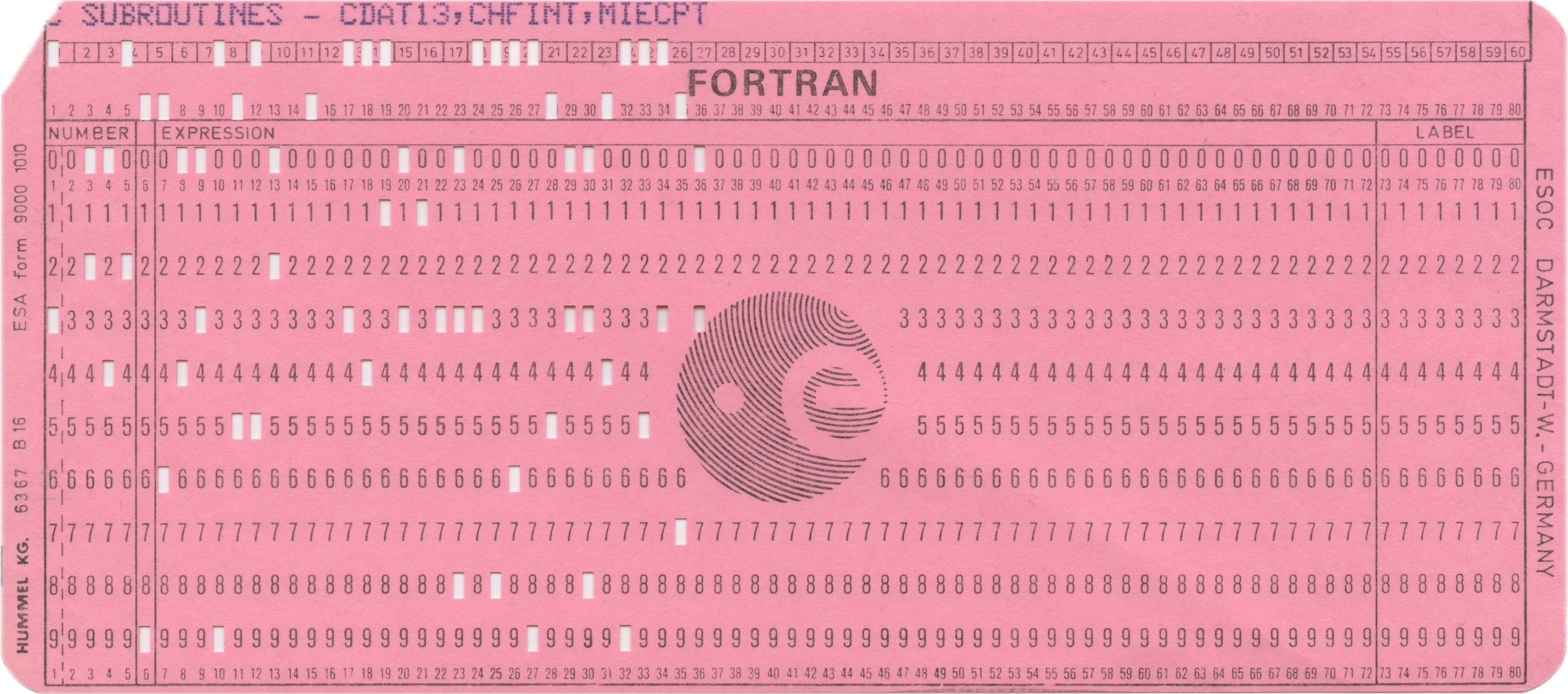

Figure-1: A punch card

with 12 rows and 80 columns. Programmers would punch each instruction into the card in the order that they wanted them to be executed.

Source:

Wikimedia Commons

Programmers would compile decks of these punch cards in shoe boxes wrapped with elastic bands to make them easy to retrieve and feed back into the machine. This was the early origins of the subroutine: a set of reusable instructions. It would still take some time before these subroutines would get their own arguments, scoped variables and return values.

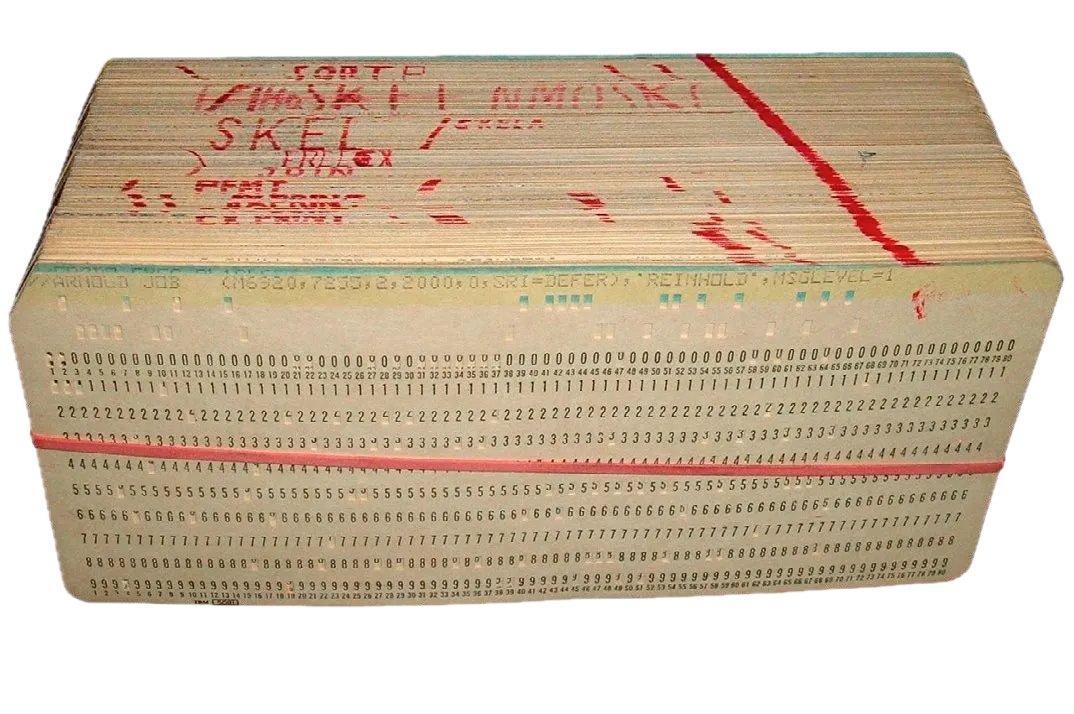

Figure-2:

A deck of punch cards representing a complete computer program. Each card would be fed one-at-a-time into an optical reader to convert them into machine code for the computer to process.

Source:

Wikimedia Commons

Note the diagonal line drawn across the deck. This is a “sorting line” so that you could quickly spot if the cards were out of order before feeding them into the machine in the wrong order and wasting time and money on the wrong code.

Modern language models similarly accept all input as a series of low-level tokens where each token is assigned a positional encoding so that the language model can keep track of the order. As prompts have gotten longer and more deeply embedded in our workflows we’ve seen programmers compiling sets of reusable prompts which they can feed back into the language model to trigger common tasks like fixing unit tests or updating documentation. Eventually these practices became formalized into things like slash commands which accept arguments and subagents which run within their own scope and can return values back to the main agent thread. We’re rediscovering the value of structured programming in a whole new context.

Context Management

Structured Programming by O.-J. Dahl, E. W. Dijkstra, C. A. R. Hoare (1972)

The core argument of Dijkstra’s Structured Programming was that computer programming wasn’t just about automating calculations to make them faster and cheaper. The art of programming was actually an intellectual challenge about how to organize complexity. This meant programmers should be thinking more deeply about things like control structures, data structures and how they could make code easier to read, maintain and reason about.

As language models increasingly take over the task of writing the conditional statements, loops and subroutines, our role as programmers transitions to higher levels of abstraction and the art of programming once again becomes the challenge of how to organize complexity. How do we think about these new natural language abstractions beyond just ways to make prompting faster and cheaper? How do we design natural language specifications of software to be easier to read, maintain and reason about?

Looking at how prompts and agents have evolved over just the past couple of years, a familiar pattern emerges. We’re following the same progression that took us from unstructured machine code to structured programming but this time the code is written in natural language. We started with all the code in one deck of cards, executing sequentially. Then we learned to break things apart, to create reusable components, to build systems from composable pieces.

Similarly, AI developers started by putting everything in one large prompt. Like the labels scribbled on punch card decks, we add XML tags or Markdown notation to make it easier to organize but there are no real subroutines with their own variable scopes. Everything executes sequentially from start to finish.

Figure-3: In-context prompting adds all the context to the prompt followed by the agent steps. The agent uses the LLM’s attention mechanism to decide which parts of the context to reference in its decisions.

As LLM context windows grew longer, models faced a new challenge: having all available context visible simultaneously became overwhelming and inefficient. Tool calling emerged as a solution, introducing a mechanism similar to function calls in traditional programming. Rather than embedding all possible actions and data directly in the prompt, models can now invoke specific tools on demand, treating them like system calls that execute external operations and return results.

The Model Context Protocol (MCP) takes this further by acting as a system call API layer for LLMs. Just as an operating system provides system calls for file I/O, network access, and process management, MCP servers expose discrete capabilities through a consistent protocol. Models can call into these capabilities when needed, accessing external resources, querying databases, fetching data, and performing actions, while keeping the context window focused on the immediate task rather than cluttered with every possible capability.

Figure-4: Tool calling and MCP servers add a level of indirection between the agent and the context. The agent constructs tool calls with parameters, and then those are used to pull in only relevant context where it’s needed.

However, each tool call adds more context to the agent history, and eventually we end up back where we started: overwhelmed with too much accumulated context distracting the model from its current task.

Recent techniques attempt to alleviate this through compacting. Like batch processing systems that would clear the machine’s memory between jobs, these techniques summarize or drop parts of the agent’s history. There’s still more research needed into efficiently compacting the agent history. Today’s tools require the user to manually trigger the context compacting. A more capable system would be able to keep track of which context is attached to which subtasks and discard it automatically when the subtask is completed.

Figure-5: For tasks where we just need to know the results of previous tasks and not every detail of how those results were obtained we can feed all the previous steps into the LLM and prompt it to summarize those steps. Then the agent can discard those steps while keeping the summary in context.

Recently, Claude Code introduced subagents which run in their own agent trajectory with their own context. This is the closest that we’ve come to proper subroutines with local scope and a call stack. The agent calls the subagent tool to push a task onto it with parameters, the subagent executes in isolation with only the context it needs, then returns a value and pops off the stack.

Figure-6: Subagents are just a specialized tool call with its own parameters which returns context back to the agent. But instead of the tool being a black box, it’s another agent with its own prompt and access to tools; including the tool which can launch further subagents.

This progression mirrors what Dijkstra and others achieved in the 1960s and 70s: from linear execution to reusable code blocks to efficient memory management and structured programming with proper scope isolation. The core ideas behind the abstractions which made programming manageable in the machine code era (subroutines, scopes, call stacks) are proving equally essential now that we’re programming with natural language.

New Abstractions for Managing Complexity

New context management and planning techniques like subagents and task lists are more than just ways to make agents faster or more accurate; they give us ways to read, maintain, and reason about programs written in natural language.

Making agent systems readable

Subagents help us decompose complex problems into manageable subtasks. When a parent agent delegates a task to a subagent, that subagent gets its own context window containing only the information that is relevant to its specific task: no broader system prompt, no history of previous attempts, no details of sibling tasks.

Instead of searching for a needle in a haystack of long agent trajectories which grow with every tool call, we can read and understand each specialized subagent in isolation. Subagents can call out to even more specialized subagents as needed, with each level maintaining its own scope, creating a hierarchy we can navigate through. But this also raises fundamental questions: What are the right boundaries between subagents? When should context be shared versus isolated? How should complex data structures be passed between subagent layers?

Making agent systems we can reason about

Task lists turn agent planning from an opaque process into explicit, logical steps we can follow and verify. Claude Code’s task list tool externalizes the agent’s planning process, turning “what should I do next?” from a complicated reasoning step into a concrete, inspectable artifact.

Figure-7: Many agents now have task list tools available to them. This allows them to decompose a task into a detailed plan at the start and check off items as they work through them one-by-one. The task list doesn’t need to be static. As new information comes to the light the plan can be amended.

Task lists complement on-the-fly agent decision-making with explicit planning that we can reason through. You can see what the agent planned to do, what it actually did, and where it diverged from the plan. The decomposition strategy becomes something you can analyze and verify rather than a black box emerging from a series of tool calls. This visibility provides the foundation for incremental improvements - we can finally ask “is this the right plan?” rather than just “did it work this time?” There’s still a lot of work to do in this area like being able to trace the elements of an agent plan back to the context which informed it so that we’re not just fixing the plan but also refining the context.

Making agent systems maintainable

The combination of visible planning and task decomposition leads to systems we can improve over time. Subagents with clear interfaces can be swapped out for alternatives or reused across different contexts. Task lists make it possible to identify exactly where an agent’s approach went wrong and adjust either the planning strategy or the execution.

But the broader challenge of agent maintainability remains. Dijkstra emphasized that structured programming wasn’t just about making code run but about making code that you could prove was correct and maintain over time. Similarly, structured prompting isn’t just about getting agents to complete tasks once, but about creating systems we can systematically validate and improve. This requires more than just better abstractions. We need ways to review long agent trajectories, update prompts based on what we learn, and track which changes improve performance. Once we have tools that help us debug natural language specifications, how do we refactor them and improve them? These practices for iterative refinement of agent systems are still emerging.

Developing these new tools and abstractions will define how we build with AI for the next fifty years. As Dijkstra recognized, the fundamental challenge remains the same across eras: how do we organize complexity in ways that humans can understand, maintain, and reason about? I believe that the answer involves the same core concepts whether you’re writing assembly instructions on punch cards or orchestrating AI agents with prompts.